The Netherlands Institute for Sound and Vision has released its new White Paper, Towards a New Audiovisual Think Tank for Audiovisual Archivists and Cultural Heritage Professionals. The White Paper, the first effort from a new ‘AV Think Tank’ on audiovisual archiving initiated by Sound and Vision, aims to help stakeholders active in the preservation of audiovisual heritage identify their strategic priorities for the coming decade. The paper also puts forward ten recommendations for collective action to address these priorities.

Written by Peter B. Kaufman of Intelligent Television and MIT, the White Paper is the result of months of open conversation with leading experts in the field representing various positions, archives, and cultural heritage institutions. The White Paper was publicly presented at the FIAT/IFTA 2017 World Conference in October in Mexico City, and input from many of the delegates was incorporated.

Johan Oomen, head of R&D at Sound and Vision, stated, “This White Paper is one of the first steps that we are taking to establish a new international thought leadership group – the AV Think Tank – with experts from around the globe. Through the AV Think Tank, we aim to lay the groundwork for an AV archiving sector that enables more long-term use of its assets, more learning, and more educational activity.”

The White Paper is meant to start a conversation with the audiovisual archiving and cultural heritage communities and within the AV Think Tank itself. It addresses the current context of the work that audiovisual archivists and cultural heritage professionals do and many of the challenges they face. It puts forth ten recommendations as strategic priorities for the coming ten years around which action, research and development, and resources could be organised. Each recommendation is accompanied by a timeline of first collective actions to take.

The AV Think Tank is committed to following up on these recommendations through a series of activities, and is creating task forces and working groups focused on these issues. These groups will conduct further research and connect with other relevant stakeholder groups; lay the groundwork for policy and practice recommendations through the creation of handbooks, strategy papers, and other publications; and develop action plans that connect directly to the interests of AV archivists and cultural heritage professionals. Through these activities, this group of international experts, in consultation with the wider community, is working to articulate an international research and action agenda for our shared strategic priorities for the next ten years.

The White Paper can be downloaded directly as a PDF here. To join the conversation about our shared strategic priorities, follow and comment on the White Paper on Medium here.

The AV Think Tank invites comment from and conversation with anyone interested in the present and future of our shared moving image cultural heritage.

To contact the AV Think Tank, please email: avthinktank@beeldengeluid.nl

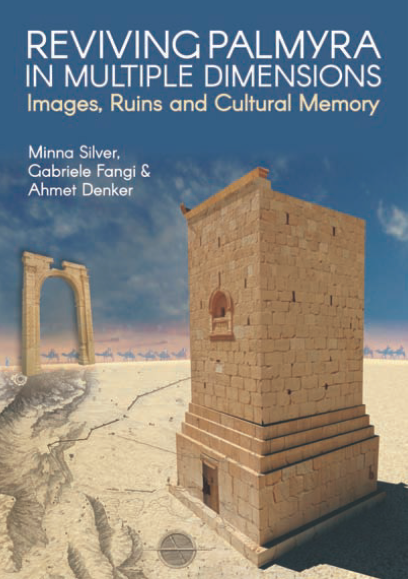

Reviving Palmyra in Multiple Dimensions: Images, Ruins and Cultural Memory, a book by Minna Silver, Gabriele Fangi and Ahmet Denker, was released in February 2018. It is a classic example of digital archaeology and virtual reconstruction and a beacon for the recording of international cultural heritage. It provides a comprehensive and fascinating study of Palmyra, a World Heritage Site in Syria, lifting it from the ruins.
