New Realities: Authenticity & Automation in the Digital Age

In San Francisco, at the epicenter of the digital revolution, DigitalHERITAGE 2018 was organized with an amazing lineup of talks, exhibits, workshops, tutorials, special sessions and more, including:

- Internet Archive founder Brewster Kahle on the future of the net

- Prof of Digital Museology Sarah Kenderdine on reframing museums, immersion and more

- California State Archivist Nancy Lenoil on Yosemite and digital archives

- Prof Lin-Shan Lee on breakthroughs in voice search for heritage

- Prof Deirdre Mulligan of the Center for Law & Technology on digital truth

- Dr Brian Fisher on the effort to 3D digitize every ant species on earth, and

- a special conversation on the future of preservation with father of the internet & Google Vice President Dr. Vint Cerf

5 days, 18 federating events, 100s of talks, 3 amazing venues, 50,000 sqft expo, 10 tours

WHAT: The leading global event on digital technology for documenting, conserving and sharing heritage—from monuments & sites, to museums & collections, libraries & archives, and intangible traditions & languages. Featuring keynotes from cultural leaders & digital pioneers, a tech expo, research demos, scientific papers, policy panels, best practice case studies, hands-on workshops, plus tours of technology and heritage labs.

FOCUS: Culture and technology fields from computer science to cultural preservation, archaeology to art, architecture to archiving, museums to musicology, history to humanities, computer games to computer graphics, digital surveying to social science, libraries to language, and many more.

WHO: Some 750+ leaders from across the 4 heritage domains together with industry to explore, discuss & debate the potentials and pitfalls of digital for culture. Heritage and digital professionals, from educators to technologists, researchers to policy makers, executives to curators, archivists to scientists, and more.

WHERE: In the heart of the digital revolution on the waterfront in San Francisco, USA. For the first time outside Europe following our 1st Congress in Marseille in 2013 and 2nd in Granada in 2015.

WHEN: 26-30 October 2018

- Workshop, Tutorials & Special Session Proposals Due online: 15 April 2018

- Papers & Expo Proposals Due online: 20 May 2018

- Notification: 15 July 2018

- Camera Ready Deadline: 1 September 2018

Website: http://www.digitalheritage2018.org

A federated Congress of many leading events, DigitalHERITAGE 2018 included:

2 Conferences:

- the Int’l Society on Virtual Systems and Multimedia: VSMM 2018 – 24th International Conference

- the Pacific Neighborhood Consortium: PNC 2018 – 25th Conference & Joint Meetings

1 Exposition by ARCHAEOVIRTUAL & Italian National Research Council: DigitalHERITAGE Expo & Livestream from Paestum, Italy

+15 Special Events.

DigitalHERITAGE 2018 ran for 5 days and explored digital innovation and challenges across 4 heritage domains: Built Heritage, Artifacts & Collections, Libraries & Archives, Intangible Culture & Traditions, and across 3 broad groups of digital technology: Reality Capture (digitization, scanning, remote sensing, …), Reality Computing (databases & repositories, KM, GIS, CAD, 3DCG, authoring, archives, …), Reality Creation (VR, AR, MR, games, visualization, multimedia, 3D printing, embodiment, …).

The Centre for Image Research and Diffusion (CRDI) of the Girona City Council and the Association of Archivists of Catalonia are convening the 15th Image and Research International Conference, which will be held in the Palau de Congressos de Girona from the 22nd to the 23rd of November. On the 21st and 24th, two workshops related to the Conference's areas of interest will be organized.

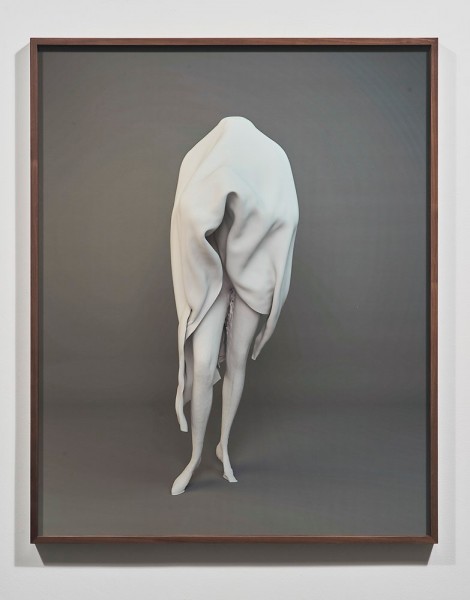

Still Life features a networked video installation, prints rendered from augmented reality, immersive wallpaper constructed from bump map imaging, and gold mined from the California landscape. The installation peels back multiple layers of material translation to reveal a displaced human body within contemporary systems of value creation.

iPRES 2018 – Where Art and Science Meet – The Art In the Science & The Science In the Art of Digital Preservation – will be co-hosted by MIT Libraries and Harvard Library on September 24-27, 2018.